State-of-the-Art Web Archiving Techniques: Part I

Over the next few months, the Investigating and Archiving the Scholarly Git Experience (IASGE) team will explore how academics are using git hosting platforms and how Library and Information Science (LIS) professionals can effectively archive and make accessible the scholarship and scholarly ephemera hosted on platforms such as GitHub, GitLab, and Bitbucket.

My role within this project is to investigate archival and preservation methods for capturing, storing, preserving, and making accessible git repositories, including source code and its contextual ephemera. What I will be exploring ranges from self-archiving git repositories to fully programmatic means of capture. Some of my main research questions include: How can traditional archiving and preservation practices, such as appraisal and policy, inform how git is preserved? How can newer technologies, such as web archiving, be leveraged to include, support, and further the preservation of git for future use?

As I endeavor to answer these questions, I am also keeping in mind how these topics intersect with the scholarly git experience, something which my colleague Sarah Nguyen is exploring with her own set of research questions and analyses. As our research questions intersect, we hope to provide our community with a series of blog posts on our progress. In this blog post, I provide a brief history of web archiving. My next post will be a companion to this one that will include a deeper dive into web archiving technologies and explore how web archiving tools can be used to capture software and source code.

What is Web Archiving?

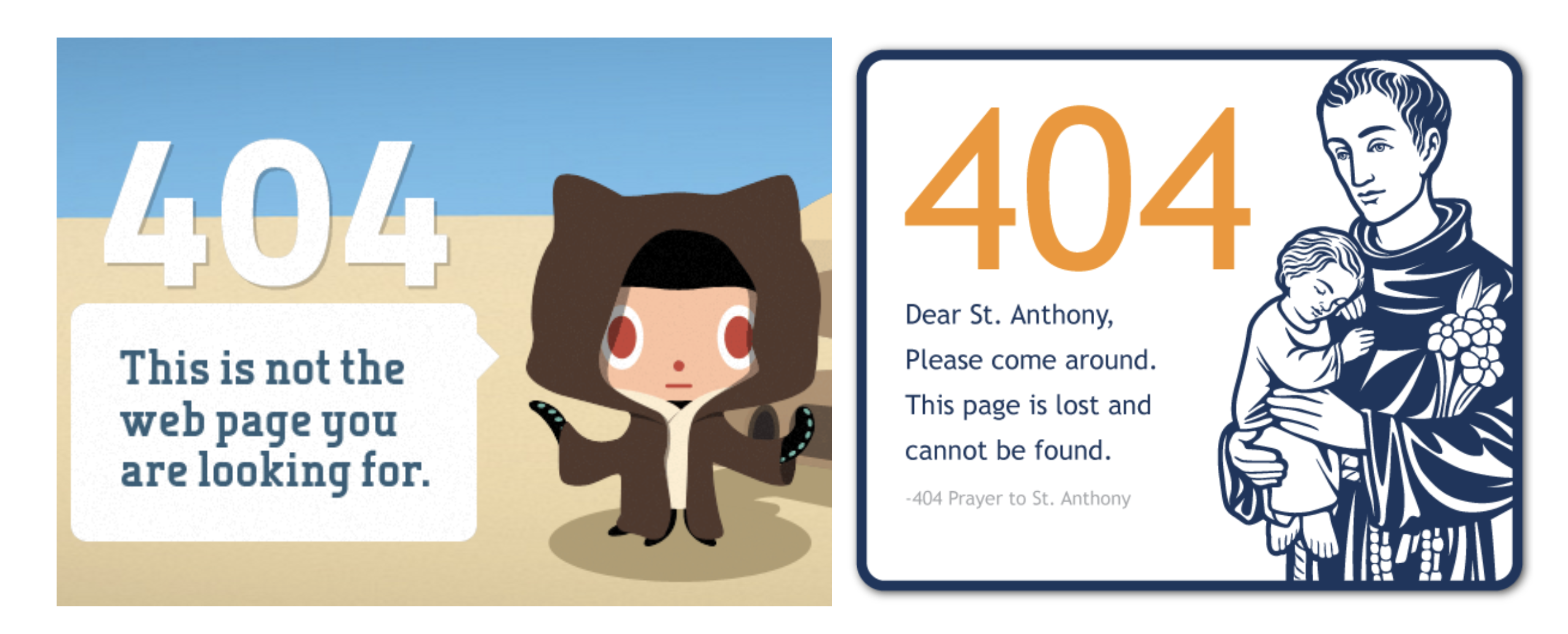

The process of web archiving is an essential way to capture, store, and preserve digital material hosted on the web. The need for, and importance of, web archiving stems from the reality that the web is neither a static nor a stable environment. In fact, it is an extremely ephemeral place that is prone to content drift (the movement of information from one URL to another) and link rot (the inability of a URL to (re)direct to an intended page). As early as the mid-1990s, organizations and institutions began to recognize this ephemerality, and since then they have made concerted efforts to capture, store, and preserve born-digital and digitized content hosted on the web for future use (Costa, Gomes, & Silva, 2017).

The history of web archiving is closely tied to the development of the World Wide Web. While working at CERN, Tim Berners-Lee developed the Hypertext Transfer Protocol (HTTP), the first documented version of which appeared in 1991. A later version, HTTP/1.1, was published as an Internet standard in 1997 (RFC 2068) and was used by many, if not most, browser developers. Meanwhile, in 1996, Brewster Kahle recognized that the many documents, texts, and images shared over the web were an important part of the cultural record. He also realized that no plan existed for preserving this content. Comparing the loss of this content to the burning of the Library of Alexandria and the combustion of highly flammable film reels, Kahle looked for ways to preserve this material programmatically (Lepore, 2015). Believing that web-based material could be saved by “a small group of technical professionals equipped with a modest complement of computer workstations and data storage devices,” Kahle founded the Internet Archive (Kahle, 1997).

Shortly after the Internet Archive was founded in 1996, the Library of Congress developed its own pilot web archiving program, MINERVA. Begun in 2000 and known today as the Library of Congress Web Archives, MINERVA was first designed, in collaboration with the Internet Archive, to capture material related to the 2000 presidential election. After 9/11, however, the project quickly pivoted to capturing content related to the attacks and the developments that unfolded in the days, weeks, and months thereafter (Grotke, 2011). Web archiving initiatives were not, however, limited to the United States. Australia’s Web Archive (1996), Sweden's Kulturarw3 (1996), the New Zealand Web Archive (1999), Rhizome (1999), and the Czech Republic’s WebArchiv (2001) also developed during this same period (Costa, Gomes, & Silva, 2017).

An interesting component of this growth is the connection between national libraries and web archives with regard to legal deposit. Legal deposit requires that a person, group, or organization deposit copies of published works, often with a national or governmental library. Legal deposit material is available to researchers but is often subject to access approval as well as regulatory and on-site access restrictions. In the digital age, these laws have been modified to include non-print, digital publications such as websites. Institutions such as the Danish Netarchive.dk and the UK Web Archive, for example, capture material in compliance with the legal deposit laws of their respective countries (Webster, 2017). The UK Web Archive, a partnership of the six Legal Deposit Libraries in the UK, ingests “any and all UK based websites” with top-level domains such as .uk, .scot, .wales, .cymru, and .london. To capture much of this content, the UK Web Archive conducts a massive annual domain crawl, along with daily captures of frequently updated websites such as news sites.

Taken together, the nearly simultaneous development of multiple web archiving efforts, both to capture content and to meet legal requirements, demonstrates a global and largely contemporaneous commitment to capturing, saving, and preserving web content.

To support the many national and international web archiving initiatives, professional organizations, working groups, and consortia have come together to advance web archiving efforts both large and small. The International Internet Preservation Consortium (IIPC), for instance, was formed in 2003 at the National Library of France (BnF) with 12 participating institutions. Currently, there are 56 IIPC members from 45 different countries. In addition, the National Digital Stewardship Alliance (NDSA), formed in 2010, has continued to foster web archiving efforts since its formation through its web archiving research group and its surveys of web archiving initiatives (Antracoli, Duckworth, Silva, & Yarmey, 2014).

Many organizations have also noted affiliations with the Society of American Archivists’ Web Archiving Section (SAA WebArchRT), which advocates for and supports web archiving from selection to preservation, as well as with other groups such as the Ivy Plus Libraries Web Resources Collection Program, the Federal Web Archiving Working Group, and Cobweb (Farrell, McCain, Praetzellis, Thomas, & Walker, 2018). National and international consortial efforts to support web archiving have been matched by work at other types of institutions as well. Governmental agencies, cultural heritage institutions, and academic libraries, for instance, have incorporated web archiving into their preservation efforts and workflows. As a result, web archiving initiatives have grown significantly since 2003 (Gomes, Miranda, & Costa, 2011).

In my next blog post, I will discuss the web archiving technologies most commonly used in libraries and archives. In particular, I will provide brief overviews of state-of-the-art tools such as Heritrix, Archive-It, and Webrecorder. I will also discuss the policies and protocols involved in content capture. Finally, I will discuss the ways in which web archiving can be used to capture software and source code housed on web-based hosting platforms.

About Me

I’m Genevieve Milliken, Research Scientist: LIS for the Investigating and Archiving the Scholarly Git Experience (IASGE) project at New York University. I recently graduated from Pratt Institute’s School of Information, where I received my MSLIS with Advanced Certificate in the Digital Humanities. I am also a web archiving technician at New York Art Resources Consortium (NYARC). Through a series of blog posts, I will be discussing various ways of capturing, saving, and preserving software and source code, especially as it pertains to academics and their scholarly pursuits. Please check back for future blog posts on self-archiving, software preservation, and much, much more. @gen_milliken | genevieve.milliken@nyu.edu

Bibliography

Antracoli, A., Duckworth, S., Silva, J., & Yarmey, K. (2014). Capture All the URLs: First Steps in Web Archiving. Pennsylvania Libraries: Research & Practice, 2(2), 155–170. https://doi.org/10.5195/palrap.2014.67

Costa, M., Gomes, D., & Silva, M. J. (2017). The evolution of web archiving. International Journal on Digital Libraries, 18(3), 191–205. https://doi.org/10.1007/s00799-016-0171-9

Farrell, M., McCain, E., Praetzellis, M., Thomas, G., & Walker, P. (2018). Web Archiving in the United States: A 2017 Survey [Report]. https://doi.org/10.17605/OSF.IO/3QH6N4

Gomes, D., Miranda, J., & Costa, M. (2011). A Survey on Web Archiving Initiatives. In S. Gradmann, F. Borri, C. Meghini, & H. Schuldt (Eds.), Research and Advanced Technology for Digital Libraries. TPDL 2011. Lecture Notes in Computer Science, vol. 6966. Berlin, Heidelberg: Springer.

Grotke, A. (2011). Web Archiving at the Library of Congress. Computers in Libraries, 31(10), 15–19. Retrieved from http://www.infotoday.com/cilmag/dec11/Grotke.shtml

Jones, S. M., Van de Sompel, H., Shankar, H., Klein, M., Tobin, R., & Grover, C. (2016). Scholarly Context Adrift: Three out of Four URI References Lead to Changed Content. PLOS ONE, 11(12), e0167475. https://doi.org/10.1371/journal.pone.0167475

Kahle, B. (1997). Preserving the Internet. Scientific American, 276(3), 82–83. Retrieved from https://www.jstor.org/stable/24993660

Webster, P. (2017). Users, technologies, organisations: Towards a cultural history of world web archiving. In N. Brügger (Ed.), Web 25: Histories from the First 25 Years of the World Wide Web. Bern, Switzerland: Peter Lang US.